Three-act structure is one of the most common tools for setting up a successful narrative. It is used heavily in cinema. However, its usefulness in game writing is still disputed. In this article I will look at the basic concepts of this approach and discuss its usefulness for game design. I will first outline what is meant by three-act structure and how it is commonly applied in mainstream cinema. Later on I will look at the structure of classical arcade games to draw some conclusions.

Three-Act Structure: An Introduction

Mainstream cinema typically makes use of the three-act structure in narrative design. This is the classical notion of a story that has a beginning, a middle, and an end.

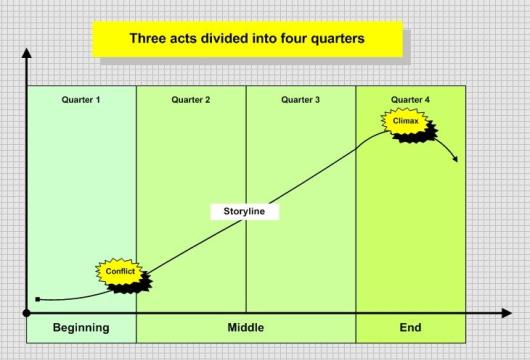

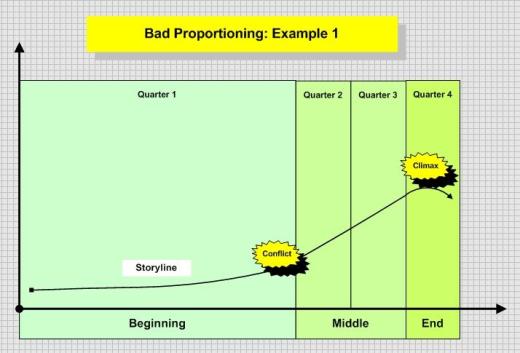

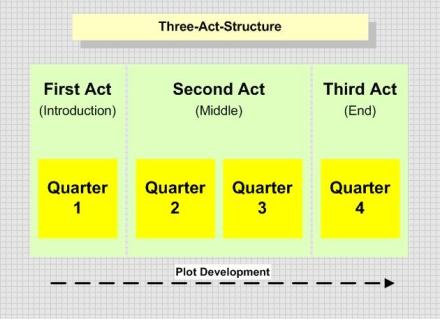

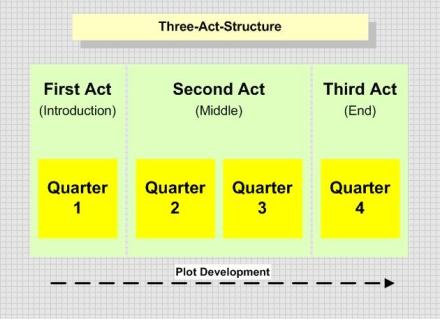

Another typical feature of mainstream cinema is a standard way of proportioning these three acts: the content is often presented in four quarters, of which one is allotted to the first act (beginning, or introduction), one to the third act (end, or finale), and two to the second act (middle). Expressed through a diagram, the three-act structure built around four quarters looks like this:

The two quarters in the middle act are usually reserved for two secondary lines of action which are causally connected to the main plotline and gradually carry the story to the final act.
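The four-quarter proportioning described above comes down to simple arithmetic. The following is a minimal sketch, assuming equal quarters and a hypothetical 120-minute running time; the function name `act_boundaries` is made up for illustration, and real films will rarely hit these marks exactly.

```python
def act_boundaries(runtime_minutes):
    """Split a runtime into four equal quarters and group them into three acts.

    Assumption: quarters are exactly equal, which is an idealization.
    """
    quarter = runtime_minutes / 4
    return {
        "Act 1 (quarter 1)": (0, quarter),
        "Act 2 (quarters 2 and 3)": (quarter, 3 * quarter),
        "Act 3 (quarter 4)": (3 * quarter, runtime_minutes),
    }


# Hypothetical 120-minute film, not the running time of any specific title
for act, (start, end) in act_boundaries(120).items():
    print(f"{act}: minute {start:g} to minute {end:g}")
```

With these assumptions the middle act takes up half of the running time, which is why it is usually split into two secondary lines of action.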

An example would be James Cameron’s feature film Terminator 2: Judgment Day (1991):

Quarter 1 (First Act): Setup of the plot, introduction of characters, planting of middle acts.

Quarter 2 (Second Act): The first of the middle acts, in which the goal is to free Sarah Connor from the clinic in which she is being held.

Quarter 3 (Second Act): The second of the middle acts, in which the goal is to destroy Skynet and its infobase.

Quarter 4 (Third Act): Build-up to the climax, resolution and denouement.

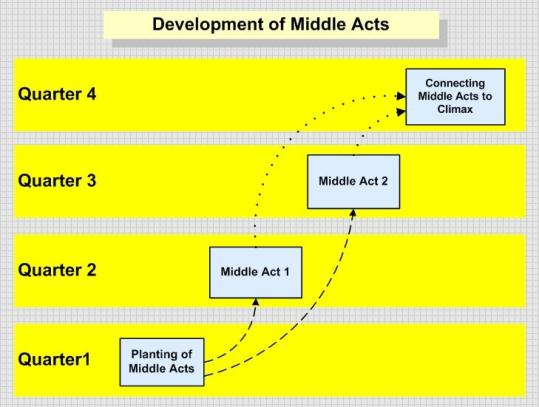

We can speak of a variety of goals for each of the acts (and quarters). See the diagram below:

The first quarter aims at catching the attention of the audience. A very common method for this is to use a teaser. This quarter’s main goal is to set up a conflict and lock it onto the protagonist. This will put her in a position where she has no choice but to deal with the problem. Another important task for the narrative designer is to create identification between the protagonist and the audience.

The middle act with its two quarters functions like a bridge between the setup and the final act. The main goal is to increase the initial tension by carrying the plot forward while putting the character through a series of tests. Each of the two quarters ends with an important moment for the character: first a deep crisis (low point), then usually an awakening (peripety, or turning point).

The final act is reserved for the climax. The way to the final encounter with the enemy is paved. The climax brings a resolution to the conflict. This is followed by the story wrap-up or denouement.

A more detailed picture of the dramatic structure of a typical movie would look like this then:

In this diagram, plot-related scenes/sequences are colored green, character-related scenes/sequences are beige, and secondary plotline related scenes/sequences are blue.

Typical of this kind of story structure is that an important turning point in the plot is always followed by an evaluation and reassessment of the new situation by the characters who have been influenced. During reassessment the character will try to understand her current situation and then decide on a new course of action. We usually have three such moments of reassessment, plus a denouement at the end of the story, in which the protagonist states what she has learned from all this and how she is going to spend her life from that point on.

It’s also important to note that the first two reassessments will usually lead to courses of action that launch the secondary storylines (quarters 2 and 3) that make up the second act of the story.

Three Dynamics: Plot, Character and Secondary Storylines

While the diagram above lists a number of functions and tasks for the narrative designer to deal with, it is helpful to group these tasks around three intertwined dynamics that cut across and develop along the whole narrative structure. These are

1) Plot

2) Character

3) Secondary plotlines (middle acts)

Let’s have a look at them separately.

Plot

Plot development requires us to do some heavy work in the first quarter. The problem must be raised, interest in it must be created, later on it must be intensified, and finally it must be turned into a conflict (an inescapable fact that the protagonist must deal with).

The second and third quarters develop the plot further via secondary storylines, but it is crucial that important plot milestones are reached at the end of each of the middle quarters. Quarter 2 usually ends with a low point: the protagonist loses her status, gets injured, is cheated, etc. Her first attempt to overcome the conflict has put her into even worse conditions. Quarter 3 usually ends with a turning point which will open a door to the build-up of the climax. Once more the protagonist has attempted to overcome the problem, and once more she has failed, but this time she makes a discovery that might bring things closer to a resolution.

The fourth quarter usually consists of a build-up to the climax. The plot development reaches its end with the culmination of the climax.

Character

Character development often takes place as the character reacts to a change in her situation. This is a reciprocal process: the plot influences the character and forces her to reconsider her situation. The character then decides on a new course of action, which in turn becomes plot.

Usually we come across three moments of reassessment, each of which comes after an important turning point in plot development. The final stage of this change in character is the denouement, where the character draws a general conclusion for herself about what it meant to go through this plot and how life will be for her from now on.

Quarter 1 lays the character foundations. We find out about the traits and motives of the characters and we are provided with reasons to identify with the protagonist.

The first reassessment comes at the beginning of quarter 2, when the conflict is established and locked onto the character. The character must face a dramatic change in her situation and decide on a new course of action. Her decision will provide the bulk of action that makes up the first of the middle acts.

The second reassessment comes at the beginning of quarter 3, when she has failed miserably in her first attempt to overcome the conflict and is now in even deeper trouble. Again she must make a decision to change her situation, and the course of action she decides to take will form the bulk of the second of the middle acts.

The third reassessment comes at the beginning of quarter 4, when she has failed once more in her attempt to overcome the conflict but has discovered something that might be useful in her next attempt. The course of action she decides on after her evaluation of the situation will usually pave the way to the ultimate encounter with the enemy, the climax.

The final reassessment is the Denouement. Since the plot has culminated in a climax and a resolution has been reached, the characters will state what they have learned from this experience. They will decide on a new course of action, one that reflects their learning, and then go on their way.

Secondary Plotlines

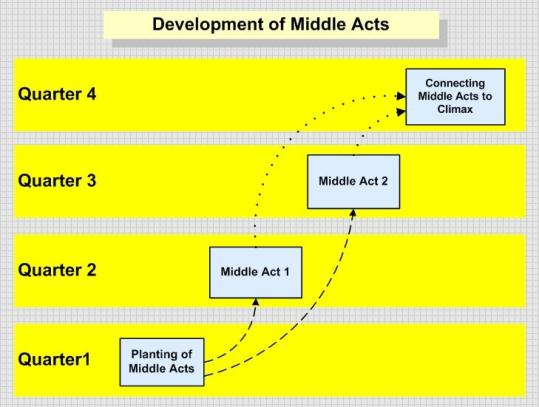

The secondary plotlines make up the middle acts of the story. Usually each of the quarters that make up the middle will deal with one central issue.

Quarter 1 lays the foundation for the two middle acts. We call this planting, since the seeds that will lead to those acts are placed into the story structure early on.

In quarters 2 and 3, each of the seeds will grow and form the bulk of significant action.

Quarter 4 will often result in the secondary plotlines being connected to the climax.

It’s the narrative designer’s task to intertwine all these three dynamics in a proportioned way that creates pace and rhythm. Further important concepts here are unity and increasing tension.

Structure and Dynamics in Classical Arcade Games

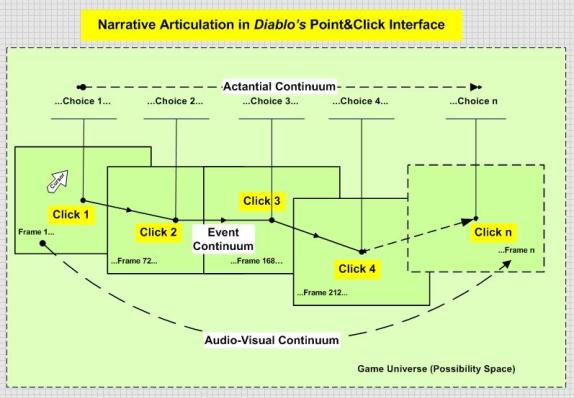

Although many people argue that games aren’t stories (or narratives), we see that game designers deal with issues of structure and dynamics similar to those in three-act structure. However, the medium as well as the business model might cause certain changes in the game designer’s approach.

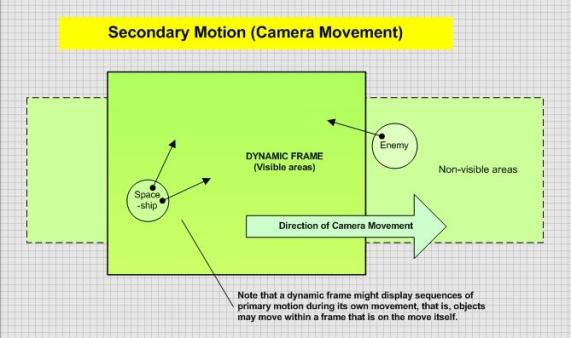

Let’s have a look at the typical structure of a classical coin-op arcade game:

Most of the time we are tempted to see game levels as the basic dramatic units. This might hold true for certain genres. However, a closer look at coin-op arcade games reveals that what really counts in these games is the “life”.

A player can go through several levels with a single life. This means that levels can be regarded as secondary goals to the primary goal: to achieve the best possible result with the lives at our disposal. Each of the lives can be regarded as an attempt to overcome the conflict (similar to the attempts that shape the quarters in the three-act structure). As our attempts fail and our lives run out, we come closer to the climax, the final act of the game. The last remaining life is usually perceived as the final act of the gameplay session. The pressure we experience here can be regarded as the equivalent of the climax in the three-act structure. The middle act of the game will often be prolonged by extra lives that we earn during our struggle. This makes it rather difficult to speak of a proportioning of the narrative around quarters. Yet it is still useful to see a three-act structure in this.

After each loss of life, a reassessment will follow. Often this will be an evaluation of the player’s performance: a calculation of kills, combos, bonuses, clean streaks, sector records and other progress indicators. Such reassessments between lives create rhythm and are a way of pacing the game.

The acts and reassessment intervals are enveloped by a Demo and a High Scores screen. While the High Scores screen functions similarly to the denouement of a movie, the Demo screen performs many functions similar to those of the first act in a feature film: it will try to catch our attention, introduce characters and settings, foreshadow part of the gameplay action, etc.
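The session structure described above (a Demo, then a cycle of lives and reassessments, then the High Scores screen) can be laid out as a simple sequence. This is only an illustrative sketch: the function name `session_outline`, its parameters, and the phase labels are hypothetical, and it simplifies by treating every life before the last as part of the middle act.

```python
def session_outline(starting_lives, extra_lives_earned=0):
    """Return the phases of one coin-op session as (phase, dramatic role) pairs.

    Assumptions for illustration: a reassessment follows every lost life,
    and only the last remaining life counts as the final act.
    """
    total_lives = starting_lives + extra_lives_earned
    if total_lives < 1:
        raise ValueError("a session needs at least one life")

    # The Demo screen takes over first-act functions such as the teaser.
    phases = [("Demo", "first-act functions")]
    for life in range(1, total_lives + 1):
        role = "final act" if life == total_lives else "middle act"
        phases.append((f"Life {life}", role))
        phases.append(("Reassessment", "performance evaluation"))
    # The High Scores screen plays the part of the denouement.
    phases.append(("High Scores", "denouement"))
    return phases


# Three starting lives plus one extra life earned during play
for phase, role in session_outline(3, extra_lives_earned=1):
    print(f"{phase}: {role}")
```

Earning an extra life adds another pair of entries to the middle of the list while the final act stays a single life, which illustrates why fixed quarters are hard to apply here.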

Another difference here concerns the planting of middle acts. Such games often make repeated use of the same game mechanisms. In other words, it is difficult to speak of sequences specifically scripted to reach low points or peripeties. For example, a game like Centipede or Asteroids doesn’t have specifically designed middle acts. It’s basically the same type of system that revolves around us, just faster, or with new enemy types replacing others as the game proceeds. The kind of planting that is necessary in feature films is therefore not needed here. We would rather encounter a demo screen that hints at the various types of enemies we will face during gameplay.

A major difference between feature films and video games is the need to introduce the player to the player vocabulary and allow her to adapt to the controls. This is where the interaction element in games creates a task that narrative designers might not be acquainted with. It also brings with it the problem of getting the player locked into the conflict without putting too much pressure on her, since she needs time to get used to the interaction requirements. A teaser in a feature film can push up the tension quite early and put the protagonist under a great deal of pressure right at the beginning of the story. Game designers must be much more careful when they push the player into the conflict. The task of the demo is often to show gameplay sequences in order to give the player a glimpse of what needs to be done. The first few levels will often be designed in a way that enables the player to learn the basic actions that the game requires. These are all tasks that are rather foreign to a narrative designer.

Conclusion

In this article I compared feature films and classical coin-op arcade games in regard to plot development, character growth and the planting of middle acts. I tried to understand how far three-act structure, as used in feature films, is useful in the design of video games.

In the context of the classical coin-op arcade game we found that we can speak of a three-act structure consisting of a cycle of acts and reassessments built around “lives”. However, the interactive nature of the medium as well as the use of the game system make it difficult to speak of proportioning built around quarters. The middle act might differ significantly depending on the player’s performance. Further, we cannot really speak of scripted middle acts that culminate in carefully planned low points or peripeties. Often the game system will take care of increasing tension by using the same mechanics in ways that are more difficult to cope with.

Also, most of the functions and tasks that we see in three-act structure are distributed among video game-specific elements such as the Demo and the High Scores screen.

The most important difference, however, especially in regard to the first act, is the need to introduce the player vocabulary. The player must be introduced to the affordances provided to her and given space to adapt to the interaction requirements of the game. This is a task that narrative designers with a background in film and TV writing will probably not be familiar with if it is their first game writing project.
